The AI License Graveyard Is Growing

Many organizations right now have at least two AI tools collecting dust in their HR tech stack. The licenses renew automatically. Vendor CSMs send cheerful quarterly emails. And absolutely nobody is logging in.

CHROs tell themselves it's an adoption problem. Maybe teams need better training. Maybe they need executive pressure. Maybe they just need more time.

But here's what's actually happening: organizations are buying AI capabilities before they've done the foundational work those tools require to function. The vendor demo ran beautifully on clean data. Your environment has seven years of inconsistent job codes, duplicate employee records, and performance ratings that changed scoring systems twice. The AI can't parse it. And now you're three months post-purchase with nothing to show for it.

This isn't a training problem. It's a readiness problem. And the gap between what vendors demonstrate and what your infrastructure can actually support is wider than most procurement committees realize before the contract is signed.

What We See: Four Patterns That Predict AI Tool Failure

Pattern #1: The Demo Runs on Vendor Data, Not Yours

Every AI demo looks flawless. Insights surface instantly. Dashboards populate in real time. Recommendations feel smart and actionable.

Then you try to connect it to your actual systems.

Your HR data lives across multiple platforms with different field names, conflicting definitions, and zero standardization between business units. The AI tool that promised "instant insights" spends six weeks trying to map your data structure and still can't tell the difference between a leave of absence and a termination. What worked perfectly in the controlled demo environment breaks completely when it touches your real data.

Pattern #2: Nobody Talked About Data Cleanup Until After the Sale

Here's the conversation that happens around Month 2 of every failed AI implementation:

Vendor: "We need clean data to train the model."

HR: "Our data is fine."

Vendor: "Can you confirm your job titles are standardized across all business units?"

HR: "...we'll get back to you."

Most AI tools need reasonably structured, consistent data to function. But HR data is famously messy. Job titles that don't match the org chart. Termination dates that conflict between your HRIS and payroll system. Performance ratings that switched from a 5-point scale to a 3-point scale halfway through 2019. Someone has to clean, standardize, and map all of that before the AI can do anything useful.

That work often takes 6-9 months. It wasn't in the vendor proposal. It's not in your budget. And it's not getting done.

Pattern #3: "Phase 2" Features That Require Infrastructure You Don't Have

The AI tool you purchased has incredible capabilities. In theory.

Those capabilities assume you've already solved integration challenges that most organizations haven't even identified yet. They assume you have data governance standards in place. They assume your stakeholders agree on basic definitions like "high performer" or "regrettable turnover." They assume your systems talk to each other reliably.

Phase 2 never launches because Phase 1 exposed foundational gaps no one wanted to acknowledge during the buying process. The tool sits in your stack, technically implemented but functionally useless.

Pattern #4: The Quiet Orphaning

Twelve months later, the AI tool is still there. A few people ran a report once. Leadership checked the dashboard during a quarterly review. But nobody's daily workflow actually changed.

The tool isn't delivering value. But it's also not causing active problems, so the renewal happens automatically. HR leadership doesn't want to admit the investment was premature. Finance doesn't want to write off the spend. The vendor stays happy because the license renewed.

And your organization just added another dormant tool to a growing collection of AI capabilities nobody actually uses.

Why This Matters Right Now

The cost of unused AI tools isn't just the license fee.

It's the budget now locked into tools that don't work, leaving no room for solutions that might actually solve real problems.

It's the credibility lost when your "AI strategy" produces no measurable results, making future technology recommendations harder to sell internally.

It's the organizational fatigue that develops when teams are asked to adopt tools that clearly weren't ready for their environment.

It's the deteriorating relationship between IT and HR as both sides blame each other for implementations that were set up to fail from procurement forward.

It's the vendor ecosystem learning that it can oversell capabilities, because most organizations never compare what they purchased with what actually gets used after the sale.

The real damage isn't one failed tool. It's establishing a pattern where AI procurement is driven by vendor sales cycles and executive urgency rather than operational readiness. Once that pattern sets in, organizations lose the ability to distinguish between tools that might work and tools that sound impressive in a demo.

And the "AI skepticism" that follows makes legitimate automation nearly impossible to implement later, even when the infrastructure is finally ready.

5 Questions to Ask Before You Buy Another AI Tool

Use this as a screenshot-worthy gut check before your next AI procurement decision.

Question #1: Can this tool run a proof-of-concept on our actual data?

If the demo only works with vendor-provided sample data, you're evaluating theoretical capability, not what will function in your environment. Require the vendor to connect to your systems and process your real data structure during the evaluation phase. If they can't, or if they say "we'll handle that after purchase," that's your answer.

Question #2: Who owns data governance, and have they signed off on this?

AI tools need clean, standardized inputs. If no one has mapped your current data state or confirmed it's usable by the tool you're considering, you're buying a solution that assumes infrastructure you don't have. Before you evaluate features, evaluate whether your data can actually support them.

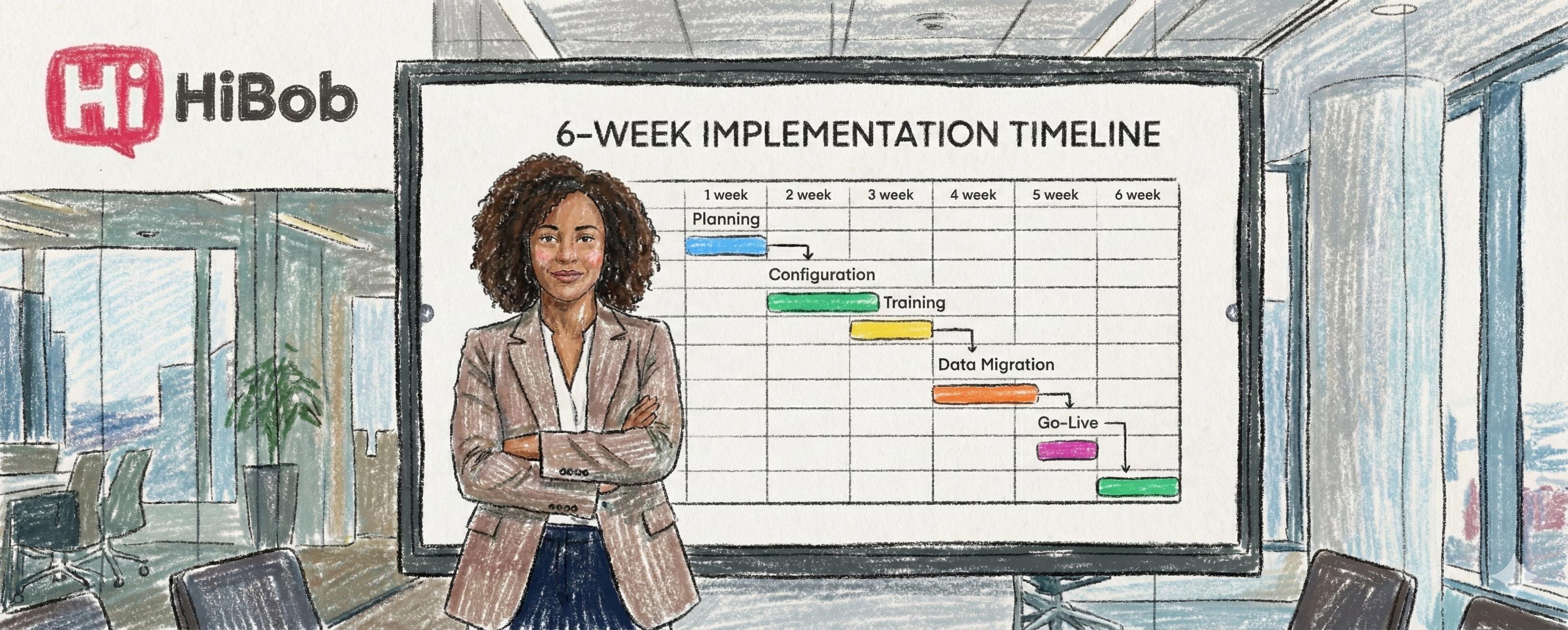

Question #3: What does the realistic timeline look like, including integration and cleanup?

Most vendor timelines assume your data is ready, your systems integrate easily, and adoption happens instantly. Reality includes data mapping, integration testing, cleanup work, and user onboarding, and the cleanup alone can take 6-9 months. If the ROI model assumes the tool delivers value in Month 1, the math is wrong.

Question #4: Can we describe the problem this solves in two sentences?

"We need better insights" isn't specific enough. "We want to be more data-driven" isn't a problem statement. If you can't articulate the exact workflow pain point this tool addresses and how you'll measure whether it's solved, you're buying a solution in search of a problem.

Question #5: Has IT confirmed every integration point is feasible?

AI tools rarely work in isolation. They pull data from your HRIS, ATS, performance management system, LMS, and more. If IT hasn't stress-tested every connection point and confirmed that each one works without custom development, you're assuming a technical simplicity that may not exist.

What CHROs Should Do Instead

Start with the workflow problem, not the technology capability

Before you evaluate any AI tool, define the specific operational pain point you're trying to solve. Not "we need better compensation insights." Instead: "Our compensation review process takes six weeks because managers don't have current market data and peer comparison benchmarks when they're making decisions, causing delays and inconsistent outcomes."

When the problem is that specific, you can evaluate whether an AI tool actually solves it or just sounds impressive.

Audit your data infrastructure honestly before you talk to vendors

Get a realistic assessment of your current data state before you evaluate any AI solution. Where does your HR data actually live? How consistent is it across systems and business units? What gaps exist in historical data? How much cleanup would be required to make that data usable?

If the answer is "months of work," that's not a reason to avoid AI tools. It's a reason to start with data infrastructure, not the shiny tool that requires infrastructure you don't have yet.

Make proofs of concept mandatory, using your data

Vendor demos should connect to your actual systems and process your real data. If a tool can't handle your data structure during the evaluation phase, it won't magically work better after you've signed a three-year contract. This isn't about doubting the vendor. It's about confirming the tool can function in your specific environment before you commit budget.

Build realistic timelines that account for integration, cleanup, and adoption

AI tools don't deliver value on Day 1. Plan for data mapping, integration troubleshooting, the inevitable cleanup work that surfaces mid-implementation, user training, and the adjustment period where teams figure out how the tool fits their workflow.

If your project plan assumes the tool will be operationally useful within 60 days, you're setting up for failure. If the vendor's ROI model assumes instant value, their projections don't reflect your reality.

Define success as usage, not access

"Number of users with login credentials" isn't a success metric. Neither is "tool implemented and available." Define what daily or weekly usage should look like if the tool is actually solving the problem it was purchased to solve. Track whether that usage materializes. And be willing to kill tools that don't get used, even if admitting failure feels uncomfortable.

The Value of Getting This Right

When organizations approach AI procurement with operational clarity instead of vendor urgency, the outcomes look completely different.

HR teams start trusting that new tools will actually work in their environment, instead of assuming every implementation will require months of firefighting.

Budget gets allocated to solutions that solve real problems people experience daily, not theoretical capabilities that sound impressive in slides.

IT and HR collaborate on realistic timelines and shared definitions, instead of blaming each other when integrations fail.

Adoption happens organically because the tool addresses a pain point users already feel, not because leadership mandated usage.

ROI becomes measurable because the problem and solution were clearly defined before procurement, not reverse-engineered after the purchase.

Future AI investments get smarter because the organization learned what readiness actually requires, instead of repeating the same procurement mistakes with different vendors.

The goal isn't to avoid AI tools. It's to stop buying them before you're operationally ready to use them.

Start Here

Considering an AI tool right now? Before you move to contracting, take one hour to map the data infrastructure that tool actually requires and the specific workflow problem it is supposed to solve. Most AI failures are predictable during procurement if you ask the right questions. Do the readiness audit first.